At Konvu, we help organizations efficiently triage vulnerabilities discovered by Software Composition Analysis (SCA) tools. A critical piece of our platform is our runtime sensor, designed to instrument potentially vulnerable applications at runtime.

We know firsthand how frustrating it can be to manually deploy instrumentation agents across many workloads. To solve this, we've built a Kubernetes Mutating Admission Webhook that injects our sensor automatically, without burdening application teams or requiring changes to deployment manifests.

This post isn't just a walkthrough of how admission webhooks work; it's a deep dive into the practical realities of running them in production. From bootstrapping pitfalls to namespace scoping, here are some lessons we've learned deploying our own webhook across our own clusters.

Admission Controllers 101

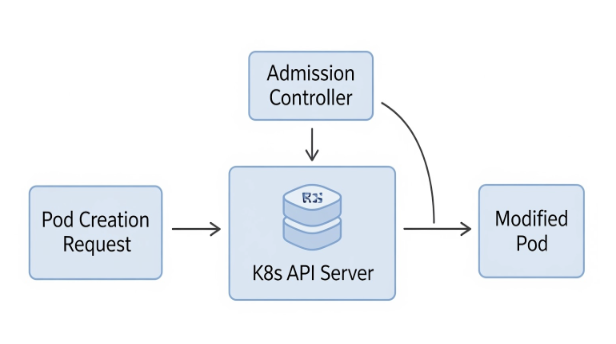

Admission controllers are plugins in the Kubernetes API server that intercept requests to create, update, or delete resources after authentication and authorization, but before the object is persisted to etcd.

They come in two flavors:

- Validating controllers: Allow or deny a request (e.g. requiring specific labels or rejecting privileged containers).

- Mutating controllers: Modify the request payload (e.g. injecting containers or environment variables in pods).

Our webhook falls into the second category. The Kubernetes API server calls all mutating webhooks first, then validating webhooks, so validation always runs against the final, mutated object and a mutation cannot bypass validation checks.

Our Use Case: Agent Injection via Admission Webhook

Deploying application performance monitoring (APM) agents or runtime instrumentation tools in Kubernetes usually comes with a lot of friction. The traditional path often requires baking agents directly into Docker images, or wrapping application start commands with bootstrap scripts that preload the agent. Both approaches come with major downsides:

- They force changes to application build pipelines, which means buy-in from each app team.

- They introduce drift; different services might run different agent versions depending on when their image was last rebuilt.

- They slow down adoption; every team has to stop what they're doing and learn how to add the agent properly.

For us at Konvu, this complexity was unacceptable. If our runtime sensor is to provide value in real-life environments, it must be as easy as possible to deploy. No custom builds. No manual steps. No "works on staging but not in prod."

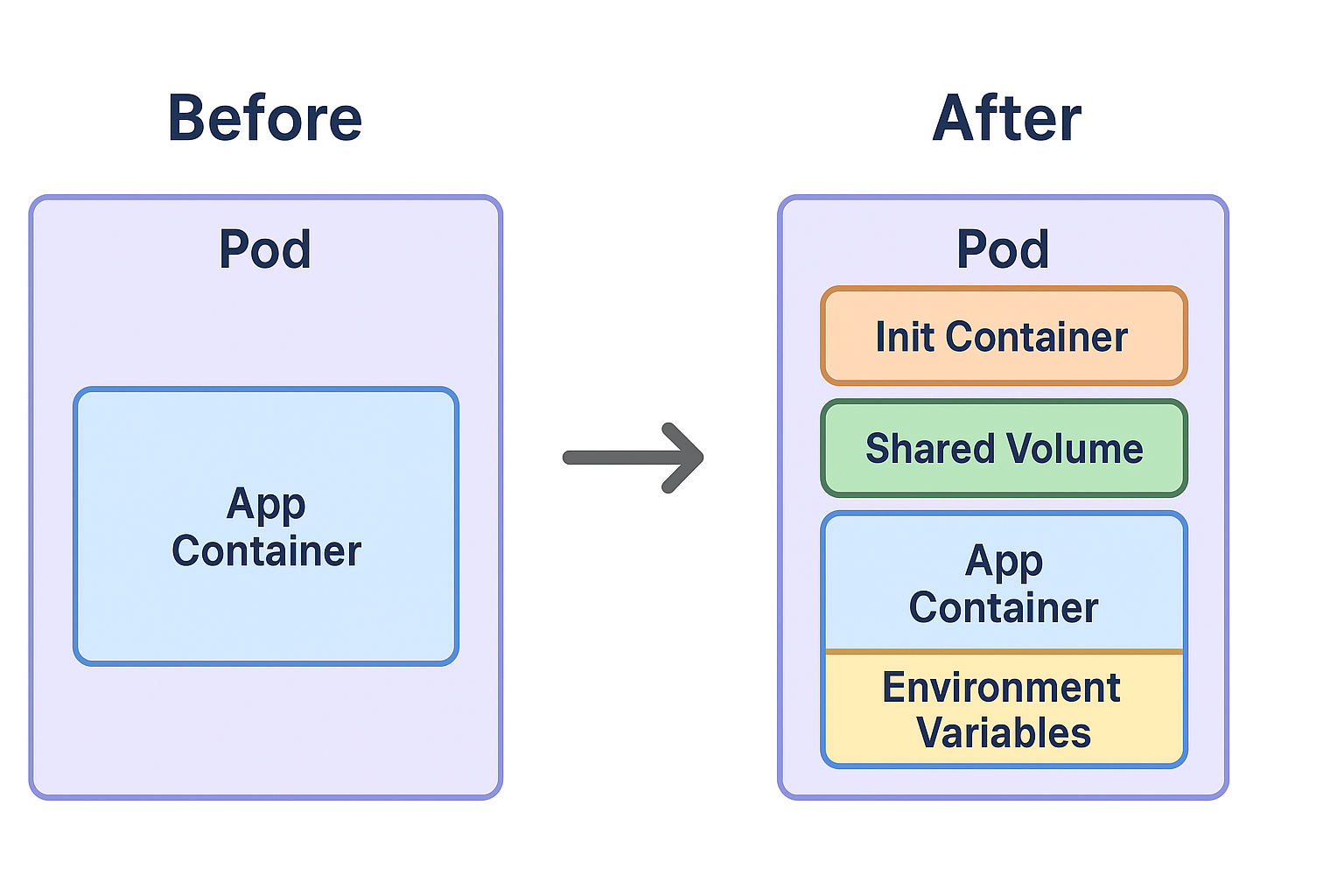

That's where mutating admission webhooks shine. By hooking into the pod creation process itself, we can inject everything we need automatically:

- A shared volume to host the agent.

- An init container to download and configure the right version of the runtime sensor.

- The required environment variables so the app container loads the agent seamlessly at startup.

This means developers don't have to touch their Dockerfiles or entrypoint scripts. They can deploy the same application image as always, and the platform guarantees that the runtime sensor will be there, configured, and up-to-date.
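To make the three injected pieces concrete, here is a minimal sketch of how a webhook could express them as JSON Patch operations against a pod. The volume name, mount path, init image, and environment variable are hypothetical placeholders, not the values our sensor actually uses.

```python
# Hypothetical placeholder values, for illustration only.
VOLUME_NAME = "sensor-agent"
MOUNT_PATH = "/opt/sensor"
INIT_IMAGE = "registry.example.com/sensor-init:1.0.0"
AGENT_ENV = {"name": "SENSOR_AGENT_PATH", "value": MOUNT_PATH}


def _append(ops, path, existing, item):
    """Add `item` to the array at `path`, creating the array if needed."""
    if existing:
        # "/-" appends to an existing, non-empty JSON array.
        ops.append({"op": "add", "path": path + "/-", "value": item})
    else:
        # JSON Patch cannot append to a missing array, so set it whole.
        ops.append({"op": "add", "path": path, "value": [item]})


def build_injection_patch(pod):
    """Return JSON Patch (RFC 6902) operations that inject the sensor."""
    spec = pod.get("spec", {})
    mount = {"name": VOLUME_NAME, "mountPath": MOUNT_PATH}
    ops = []

    # 1. Shared volume that hosts the agent files.
    _append(ops, "/spec/volumes", spec.get("volumes"),
            {"name": VOLUME_NAME, "emptyDir": {}})

    # 2. Init container that places the agent on the shared volume.
    _append(ops, "/spec/initContainers", spec.get("initContainers"),
            {"name": "sensor-init", "image": INIT_IMAGE,
             "volumeMounts": [mount]})

    # 3. Mount the volume and set the env var in every app container.
    for i, container in enumerate(spec.get("containers", [])):
        base = f"/spec/containers/{i}"
        _append(ops, base + "/volumeMounts",
                container.get("volumeMounts"), mount)
        _append(ops, base + "/env", container.get("env"), AGENT_ENV)

    return ops
```

The helper exists because a JSON Patch `add` with a `/-` suffix fails when the target array is absent, which is common for `initContainers` and `env` on simple pods.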

In other words, the admission webhook makes our runtime sensor zero-friction. It aligns with the way modern Kubernetes platforms operate: pushing cross-cutting concerns (like security, observability, and compliance) into cluster-level automation rather than relying on individual teams to implement them correctly.

This enables us to instrument workloads automatically across namespaces, without changing app manifests, without requiring per-team setup, and without writing a single kubectl patch.

We package the webhook and all supporting components in a Helm chart, so deploying it is frictionless for users.

How a Mutating Admission Webhook Works Under the Hood

At first glance, the idea of "mutating" pods on the fly might sound intimidating. But in practice, the workflow is elegant and straightforward. It's Kubernetes doing what it does best: intercepting requests and giving operators a chance to enrich them before they land.

When a developer (or CI/CD pipeline) submits a pod creation request, the Kubernetes API server becomes the traffic controller. Instead of simply persisting the request, it pauses for a moment and asks: "Do any of my registered webhooks want to adjust this object before I move on?"

That's when our Mutating Admission Webhook comes into play. The API server sends it an AdmissionReview request containing the full pod object. The webhook reviews the spec, decides whether the pod matches our injection rules (for example, whether it carries a specific annotation), and, if so, replies with a JSON patch describing the changes to apply. The key here: the webhook doesn't create or own the pod. It just hands back a lightly modified recipe. The API server then applies the patch and continues as usual, running validating admission, persisting the object into etcd, and letting the scheduler do its thing.
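The exchange described above can be sketched in a few lines. This is a simplified illustration of the `admission.k8s.io/v1` AdmissionReview contract, not our production handler: the annotation key and the single marker patch are hypothetical, and a real webhook would also serve this over HTTPS.

```python
import base64
import json

# Hypothetical opt-in annotation, for illustration only.
INJECT_ANNOTATION = "example.com/inject-sensor"


def wants_injection(pod):
    """Return True if the pod carries the opt-in annotation."""
    annotations = pod.get("metadata", {}).get("annotations") or {}
    return annotations.get(INJECT_ANNOTATION) == "true"


def admission_response(review, patch_ops):
    """Build the AdmissionReview reply for an incoming review."""
    response = {
        "uid": review["request"]["uid"],  # must echo the request uid
        "allowed": True,                  # admit the pod either way
    }
    if patch_ops:
        # The patch is a base64-encoded JSON Patch document.
        response["patchType"] = "JSONPatch"
        response["patch"] = base64.b64encode(
            json.dumps(patch_ops).encode("utf-8")
        ).decode("ascii")
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


def handle_review(review):
    """Decide whether to mutate the pod and build the reply."""
    pod = review["request"]["object"]
    ops = []
    if wants_injection(pod):
        # Placeholder mutation: mark the pod as injected.
        # "~1" is the JSON Pointer escape for "/" inside a key.
        ops.append({
            "op": "add",
            "path": "/metadata/annotations/example.com~1sensor-injected",
            "value": "true",
        })
    return admission_response(review, ops)
```

Note that a pod that does not match is still answered with `allowed: true` and no patch, so the webhook never blocks workloads it does not care about.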

The beauty is in the transparency: from a developer's perspective, nothing changes. They submit a pod, and Kubernetes creates it. Behind the curtain, the webhook has done a little magic to make sure the pod includes our init container, shared volume, and environment variables. All without extra YAML in their manifests.

Practical Pitfalls (And How We Solved Them)

We've deployed our agent injector in production across multiple namespaces and workloads. It works well now, but getting there involved solving some tricky issues that most documentation and blog posts fail to mention.

1. Startup Order Matters: Avoiding Cluster Boot Deadlocks

The Problem

If your webhook intercepts every pod, including system pods or dependencies of the webhook itself, you can end up in a circular dependency:

- Webhook is needed to start Pod A (e.g. controller or ingress)

- But Pod A is needed for the webhook to run (e.g. storage class or service mesh)

- → Cluster stuck in startup loop

Our Approach

We explicitly exclude system-critical namespaces in our MutatingWebhookConfiguration:

    namespaceSelector:
      matchExpressions:
        - key: kubernetes.io/metadata.name
          operator: NotIn
          values:
            - kube-system
            - cert-manager
            - konvu-attacher # webhook's own namespace

- We support namespace opt-in and opt-out, configurable via a Helm chart.

- This keeps the cluster stable by default; no surprises during cluster upgrades or webhook redeploys.
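To show how such a selector behaves, here is a small sketch that evaluates `matchExpressions` against a namespace's labels. It mirrors the documented label-selector semantics in simplified form and is not code from the API server; the `payments` namespace is a made-up example.

```python
def selector_matches(expressions, labels):
    """Return True if every expression matches the given labels."""
    for expr in expressions:
        key, op = expr["key"], expr["operator"]
        value = labels.get(key)
        if op == "In":
            ok = value in expr["values"]
        elif op == "NotIn":
            # A namespace without the key also matches NotIn.
            ok = value not in expr["values"]
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unsupported operator: {op}")
        if not ok:
            return False
    return True


# The exclusion list from the configuration above.
EXCLUDED = [{
    "key": "kubernetes.io/metadata.name",
    "operator": "NotIn",
    "values": ["kube-system", "cert-manager", "konvu-attacher"],
}]

# Hypothetical application namespace: the webhook is called.
in_scope = selector_matches(
    EXCLUDED, {"kubernetes.io/metadata.name": "payments"})

# System namespace: the webhook is skipped entirely.
skipped = not selector_matches(
    EXCLUDED, {"kubernetes.io/metadata.name": "kube-system"})
```

Because the filtering happens in the API server, pods in excluded namespaces are admitted even when the webhook itself is down.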

2. Managing Secrets Across Namespaces

The Problem

If the injected init container needs a Kubernetes Secret (e.g. an API key or configuration file), that Secret must exist in the same namespace as the pod it is injected into, because pods can only reference Secrets in their own namespace.

But:

- You may not know all target namespaces ahead of time

- You don't want to require manual secret creation everywhere

- Copy-pasting secrets via CI isn't scalable or secure

Our Approach

We implement dynamic secret provisioning:

- The webhook's ServiceAccount has scoped permissions to:

  - Read the base secret in a central namespace (the webhook's own namespace)

  - Copy it into target namespaces on demand

  - Create the secret only if it's missing

- These permissions are tightly scoped via RBAC:

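      rules:
        # "get" can be limited to a single named secret.
        - apiGroups: [""]
          resources: ["secrets"]
          verbs: ["get"]
          resourceNames: ["konvu-sensor-secret"]
        # RBAC cannot restrict "create" by resourceNames, so it needs its own rule.
        - apiGroups: [""]
          resources: ["secrets"]
          verbs: ["create"]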

- All actions are audited and logged, and namespace filtering ensures we never mutate secrets arbitrarily.
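The copy-if-missing logic can be sketched as follows. To keep the example self-contained, it uses a hypothetical in-memory store in place of the Kubernetes API; the secret and namespace names are taken from the snippets above, everything else is an assumption.

```python
SECRET_NAME = "konvu-sensor-secret"
SOURCE_NAMESPACE = "konvu-attacher"  # the webhook's own namespace


class InMemorySecrets:
    """Hypothetical stand-in for the Kubernetes Secrets API."""

    def __init__(self):
        self._data = {}

    def get(self, namespace, name):
        """Return the secret data, or None if it does not exist."""
        return self._data.get((namespace, name))

    def create(self, namespace, name, data):
        """Create a secret; fail if it exists (like a 409 Conflict)."""
        if (namespace, name) in self._data:
            raise FileExistsError(f"{namespace}/{name} already exists")
        self._data[(namespace, name)] = dict(data)


def ensure_secret(store, target_namespace):
    """Copy the base secret into the namespace. True if created."""
    if store.get(target_namespace, SECRET_NAME) is not None:
        return False  # already provisioned, nothing to do

    base = store.get(SOURCE_NAMESPACE, SECRET_NAME)
    if base is None:
        raise LookupError("base secret is missing in the source namespace")

    try:
        store.create(target_namespace, SECRET_NAME, base)
    except FileExistsError:
        # Another webhook replica won the race; that is fine.
        return False
    return True
```

Only `get` and `create` are needed here, which matches the RBAC verbs above: the webhook never updates or deletes a secret that already exists in a target namespace.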

Wrapping Up

Using mutating admission webhooks responsibly, with awareness of startup dependencies, RBAC, and namespace scoping, turns a clever hack into a battle-tested platform automation tool. Whether you're injecting security agents, sidecars, or runtime metadata, these practical guardrails make the difference between a helpful system and a broken cluster.