To kickstart our back-to-school Konvupero we decided to have a quick, maybe a bit controversial lightning talk to jumpstart discussions. The room was full, the charcuterie board was dangerously appealing, and my goal was to keep it brisk, a little opinionated, and possibly useful. The talk's thesis is bog simple: you don't need a framework to build an AI agent, especially not for v0. Build the loop, add the tools, measure, then earn the complexity.

What is an agent?

Definitions

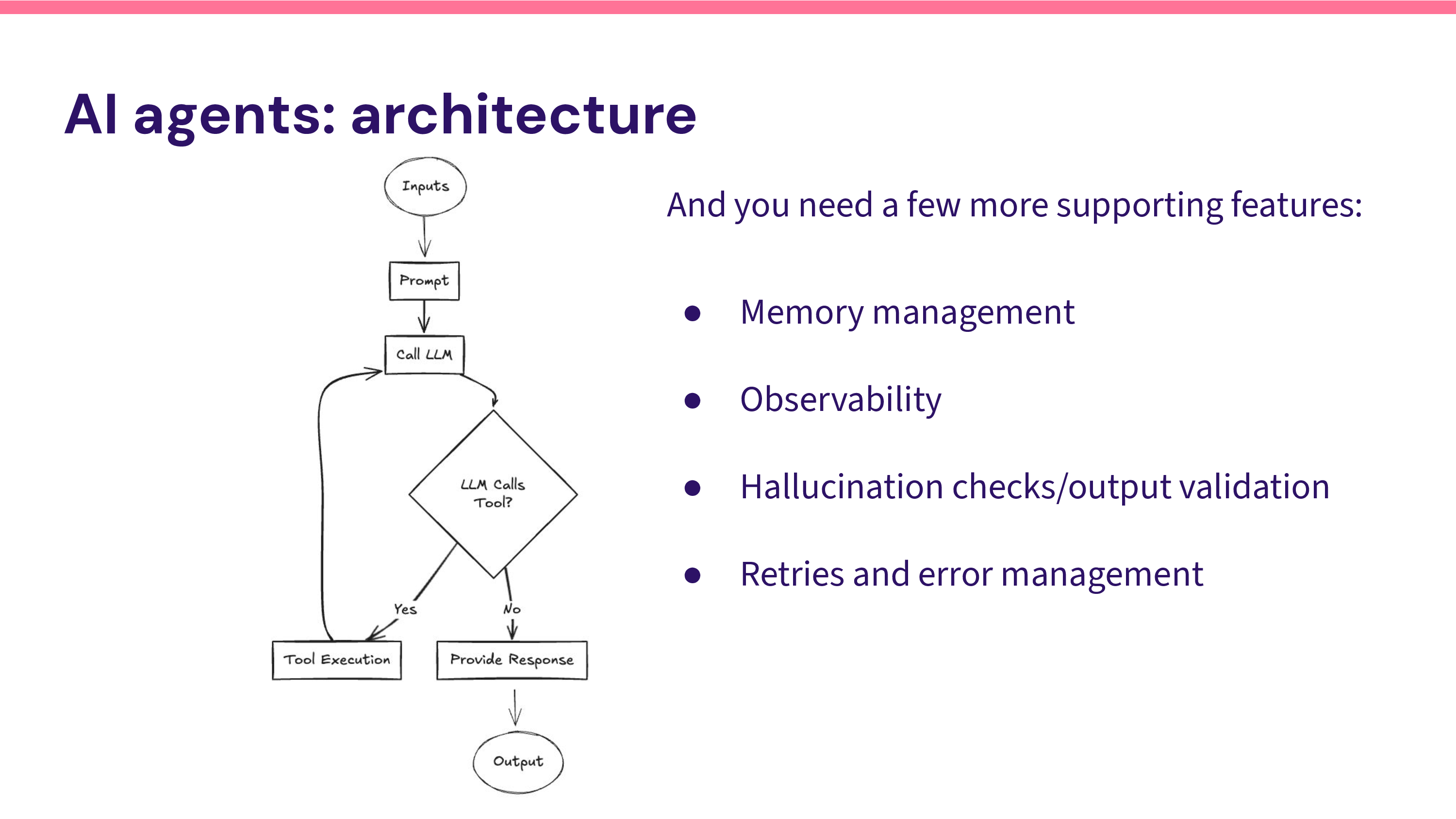

After the customary "Who am I" slides, I launched straight into two plain definitions to bound the conversation. First, an agent is a system that can pursue a task on its own. Second, in practice, an agent is a language model configured with instructions and tools. That framing will keep us honest: the "agent" isn't magic; it's an LLM plus a prompt and a toolbelt. The rest (memory, retries, tracing, etc.) is support scaffolding, not the essence.

Simplified Architecture

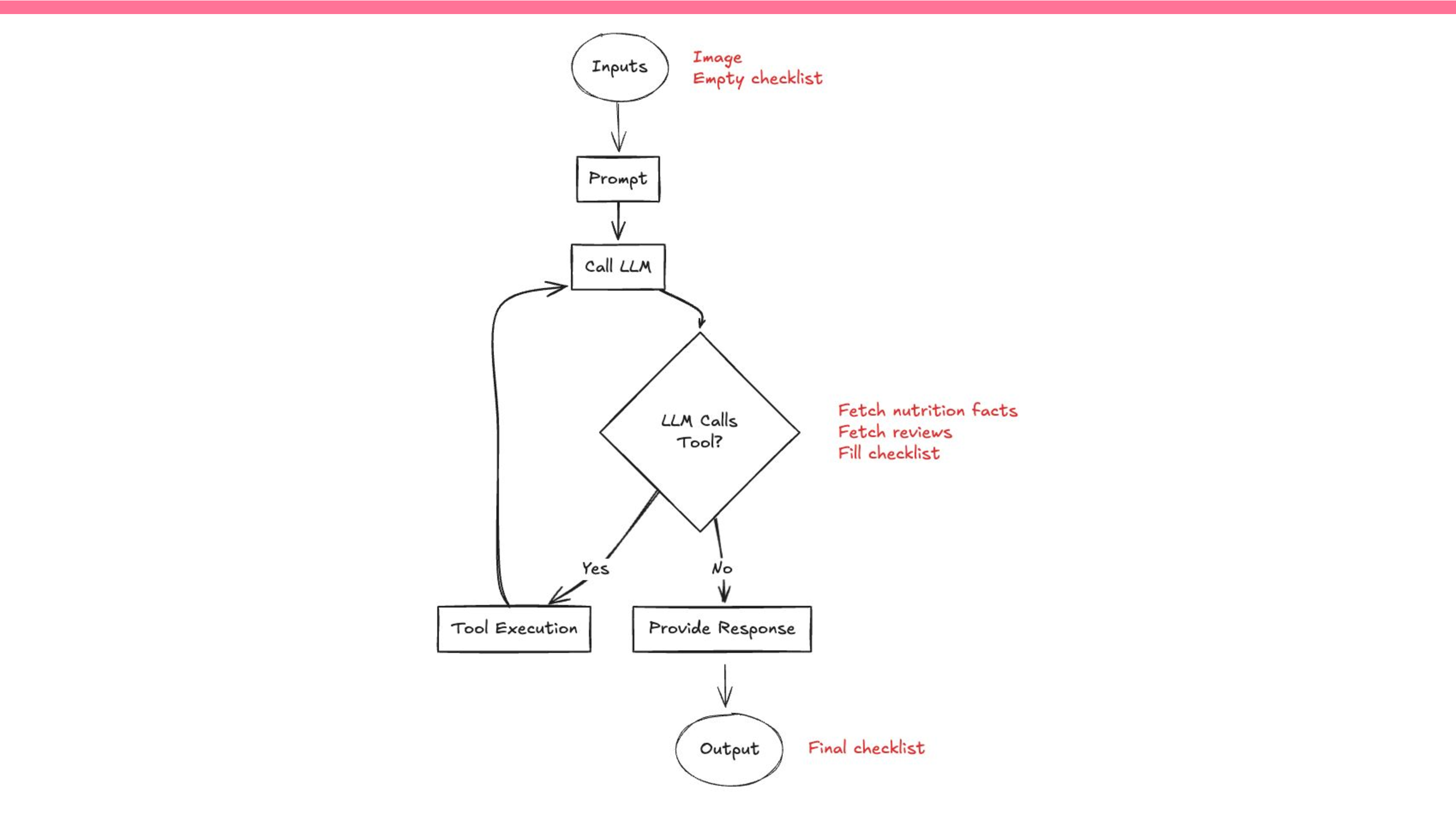

Under the hood, the loop is straightforward. Inputs go into a prompt; the model decides whether to call a tool; we execute that tool; we add the result back into context; repeat until we can answer. Around this, we layer practical concerns:

- Keep context trim,

- Observe/rate-limit so the LLM doesn't DoS your cloud,

- Validate outputs when they're checkable,

- Always handle retries; LLM services are not the most stable yet.
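To make the loop concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: `fake_model` stands in for a real LLM call, `word_count` is a made-up tool, and the message and reply shapes are invented rather than taken from any provider's API.

```python
from typing import Callable

# Hypothetical tool registry: plain functions keyed by name.
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
}


def fake_model(messages: list[dict]) -> dict:
    """Stand-in for a real LLM call (an assumption, not a provider API).

    It asks for the word_count tool once, then answers using the result.
    """
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {
            "type": "tool_call",
            "name": "word_count",
            "argument": messages[0]["content"],
        }
    return {
        "type": "answer",
        "content": f"The input has {tool_results[-1]['content']} words.",
    }


def run_agent(task: str, model=fake_model, max_steps: int = 5) -> str:
    """Prompt -> model -> maybe call a tool -> add result to context -> repeat."""
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):  # hard cap so the loop cannot run away
        reply = model(messages)
        if reply["type"] == "answer":
            return reply["content"]
        # The model asked for a tool: execute it and feed the result back.
        result = TOOLS[reply["name"]](reply["argument"])
        messages.append(
            {"role": "tool", "name": reply["name"], "content": result}
        )
    raise RuntimeError("agent did not finish within max_steps")
```

Calling `run_agent("melt the cheese slowly")` returns `"The input has 4 words."`. Swapping `fake_model` for a real client changes one function, not the shape of the loop.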

Frameworks are Premature Optimizations

None of the points mentioned above requires a framework to explain or to implement.
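Retries are a good example. The sketch below is one possible way to wrap a flaky call with exponential backoff and jitter using only the standard library. The helper name `call_with_retries` and its parameters are my own invention, and it catches every exception for brevity; real code would likely retry only on transient errors such as timeouts or rate limits.

```python
import random
import time


def call_with_retries(fn, *, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call fn(); on failure, back off and retry, up to `attempts` calls in total.

    `sleep` is injectable so tests do not have to wait.
    """
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:  # assumption: narrow this to transient errors in real code
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Exponential backoff (0.5s, 1s, 2s, ...) plus a little jitter.
            sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
```

Usage is a one-liner around whatever function talks to the model, for example `call_with_retries(lambda: client_call(prompt))`, where `client_call` is whatever your own code uses.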

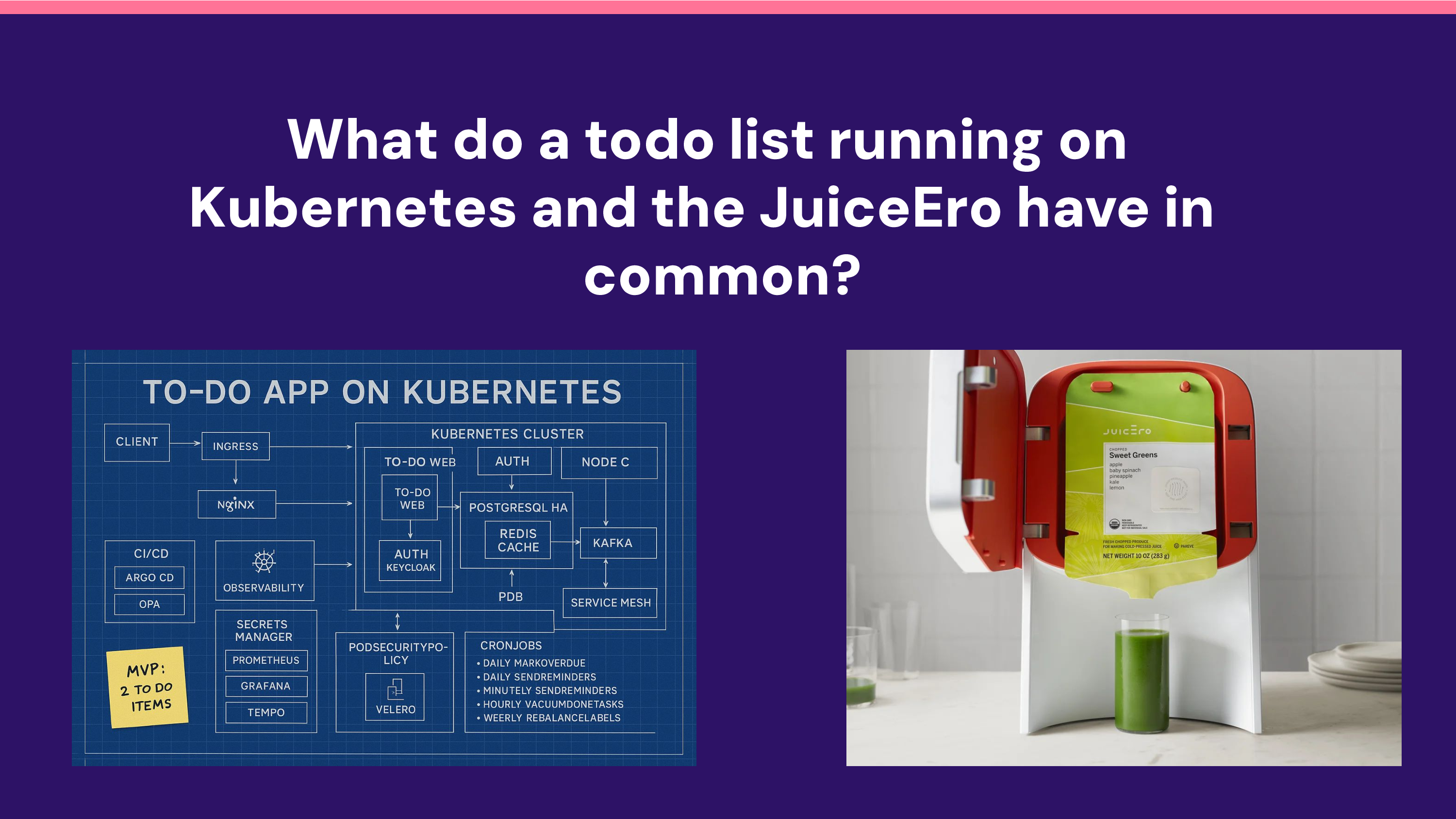

Running a todo list on Kubernetes and the Juicero both fail for the same reason: premature optimization. If your goal is "good bread with cheese," don't start by designing an IoT grilling protocol. If your goal is "ship an agent that solves a task," don't start by orchestrating a five-agent planner with speculative execution. Start with the dish, not the device.

Keep It Stupid Simple

The only reliable way to know if an agent will perform is to run it against real tasks. Without running it, it's hard to predict what it'll do. So we keep the first version small and observable, we gather failure cases, and then, only then, we earn complexity by addressing the specific reasons it misses. This is not austerity; it's proper sequencing.

Loops, Tools, and Telemetry

The pattern I recommend is a generic agent that can call a handful of tools, instrumented so we can learn. Once we understand where it fails, we can introduce more structured control flow or multi-agent patterns if they actually buy us something. But the default should be boring: a loop, a few tools, and enough telemetry to tell us what's happening.
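"Enough telemetry" can start very small. As a rough sketch, a decorator can record the name, duration, and outcome of every tool call. The in-memory `TELEMETRY` list and the stubbed `fetch_review` tool are placeholders for illustration; in practice the records would go to whatever observability stack you already use.

```python
import functools
import time

TELEMETRY: list[dict] = []  # hypothetical in-memory sink for this sketch


def traced(fn):
    """Record the tool name, duration, and success or failure of each call."""

    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        record = {"tool": fn.__name__, "ok": True}
        try:
            return fn(*args, **kwargs)
        except Exception as exc:
            record["ok"] = False
            record["error"] = repr(exc)
            raise  # telemetry must never swallow the failure
        finally:
            record["seconds"] = time.perf_counter() - start
            TELEMETRY.append(record)

    return wrapper


@traced
def fetch_review(dish: str) -> str:
    # Hypothetical tool, stubbed so the example runs offline.
    if not dish:
        raise ValueError("dish must not be empty")
    return f"Review for {dish}: molten and glorious."
```

After a few runs, `TELEMETRY` already answers the questions that matter for v0: which tools get called, how long they take, and how often they fail.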

Most "clever" patterns are expensive to build and even more expensive to validate. In practice, the simple agent will cover a surprising share of what matters. The last 20% has a nasty habit of ballooning your complexity budget; make sure it's worth it before you take on new abstractions.

The Hidden Cost of Framework Abstractions

Frameworks market themselves as orchestration for single or multiple agents, tool/function calling primitives, memory layers, schema enforcement, tracing, and model management. All of that sounds helpful. The catch is that most of it either mirrors what's already in the model APIs or wraps simple control flow you can write in the language you already use. Abstraction is only helpful when it removes incidental complexity without hiding essential complexity.

With all of them, you'll often find a second DSL that you have to learn. It'll help you to express… a loop. You trade the clarity of regular code for a graph builder and a bag of configurations that re-describe concepts your programming language already has: functions, branching, retries, and data structures. When the abstractions leak — and with agents they will — you'll end up reasoning in two languages.

Code Is the Most Precise Abstraction

My take is intentionally pointed: most of the features agent frameworks tout are redundant today. Tool calling, response formats, and even some memory patterns are supported directly by model APIs; the rest is straightforward glue. Code is the most precise way to express your agent's business logic. Precision matters when the model is already probabilistic. Why layer ambiguity on top?

"Mostly useless" doesn't mean "bad engineering" or "malicious." It means: in v0 and often v1, the complexity cost outweighs the benefit because you can get the same outcomes with simple, explicit code paths and the native APIs. If and when you truly need multi-agent topologies, complex control flow, or a standardized plugin ecosystem, that calculus can change. Until then, avoid the tax.

Illustrating this with an example: The Raclette Reviewer Agent

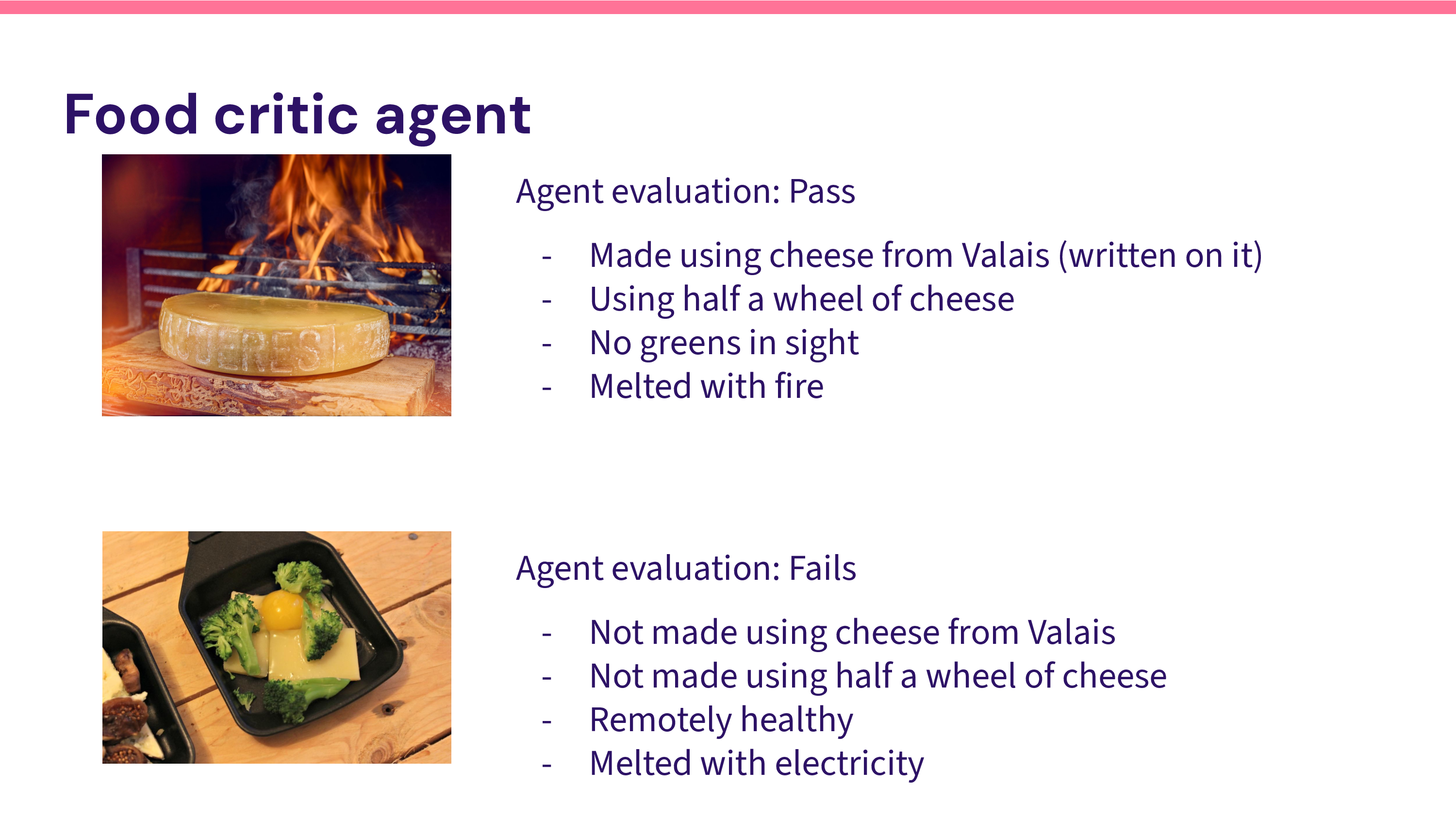

To keep this grounded, let's build a "raclette reviewer" (as someone of Swiss descent, I take my raclette very seriously 🧀) that takes an image and a checklist and outputs a pass/fail scorecard. The criteria are intentionally opinionated: cheese from Valais, half-wheel, no greens in sight, melted with fire. The point isn't cuisine; it's the pattern. Give the model a clear task, a tight input, and a way to verify.
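The "way to verify" part can be as plain as a dataclass and a validation function. This is a sketch under my own assumptions: the criterion keys are names I made up for the four rules above, and the model is assumed to return a flat mapping of criterion to boolean.

```python
from dataclasses import dataclass

# Hypothetical keys for the four criteria named above.
CRITERIA = ("cheese_from_valais", "half_wheel", "no_greens", "melted_with_fire")


@dataclass(frozen=True)
class Scorecard:
    results: dict[str, bool]

    @property
    def passed(self) -> bool:
        # A raclette passes only if every single criterion is met.
        return all(self.results.values())


def parse_scorecard(raw: dict) -> Scorecard:
    """Validate model output: exactly the known criteria, each a real boolean."""
    if set(raw) != set(CRITERIA):
        raise ValueError(f"expected keys {sorted(CRITERIA)}, got {sorted(raw)}")
    for name, value in raw.items():
        if not isinstance(value, bool):
            raise ValueError(f"{name} must be true or false, got {value!r}")
    return Scorecard(dict(raw))
```

A rejected scorecard is a useful signal in itself: the error message can be handed back to the model as context for another attempt.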

The "architecture diagram" is almost offensively simple: prompt → model → maybe call a tool → add result to context → repeat until done. We augment with utilities like "fetch nutrition facts," "fetch a review," and a function that fills the checklist. Boring on purpose. It's fast to build, fast to debug, and fast to evolve.

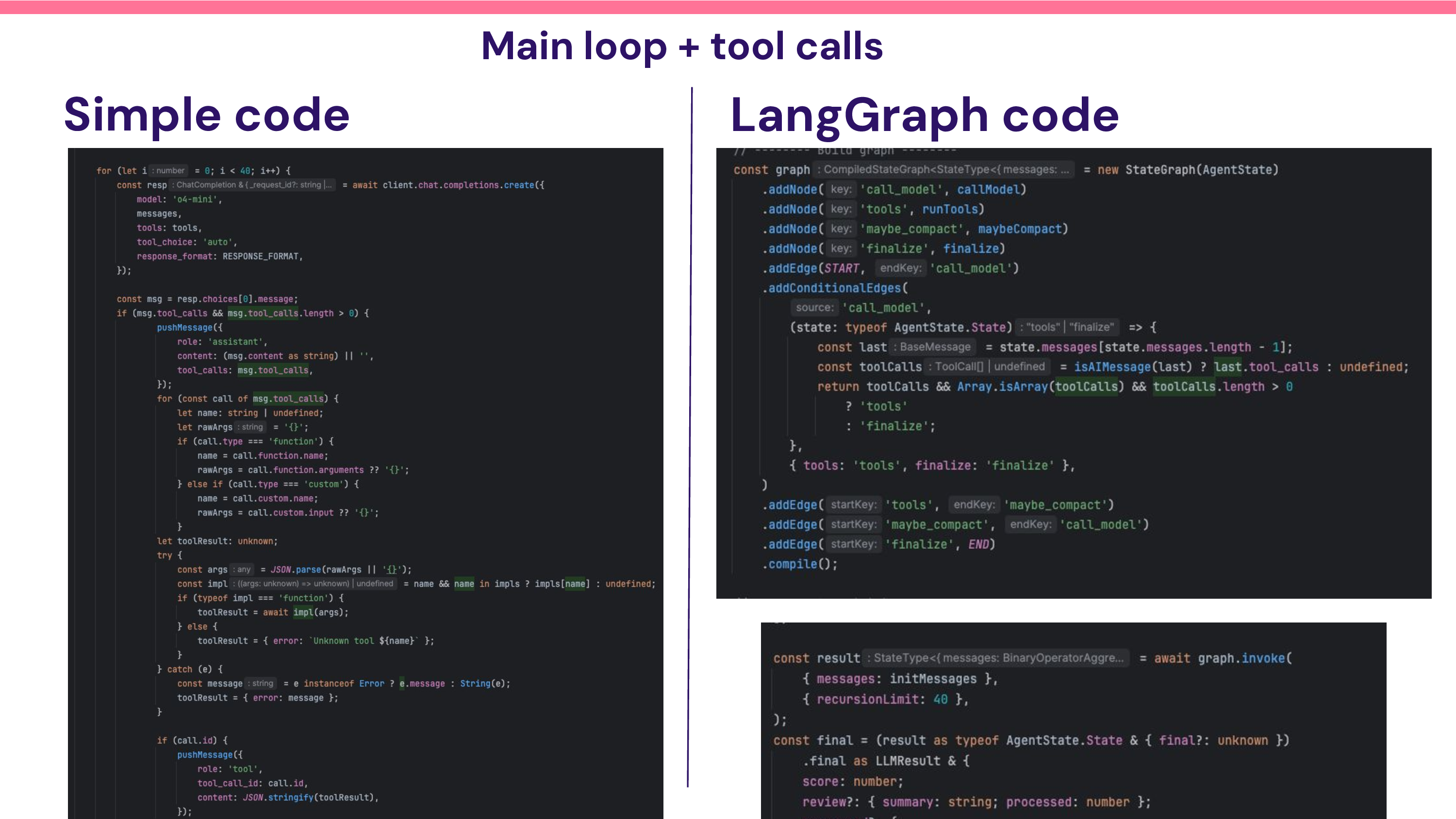

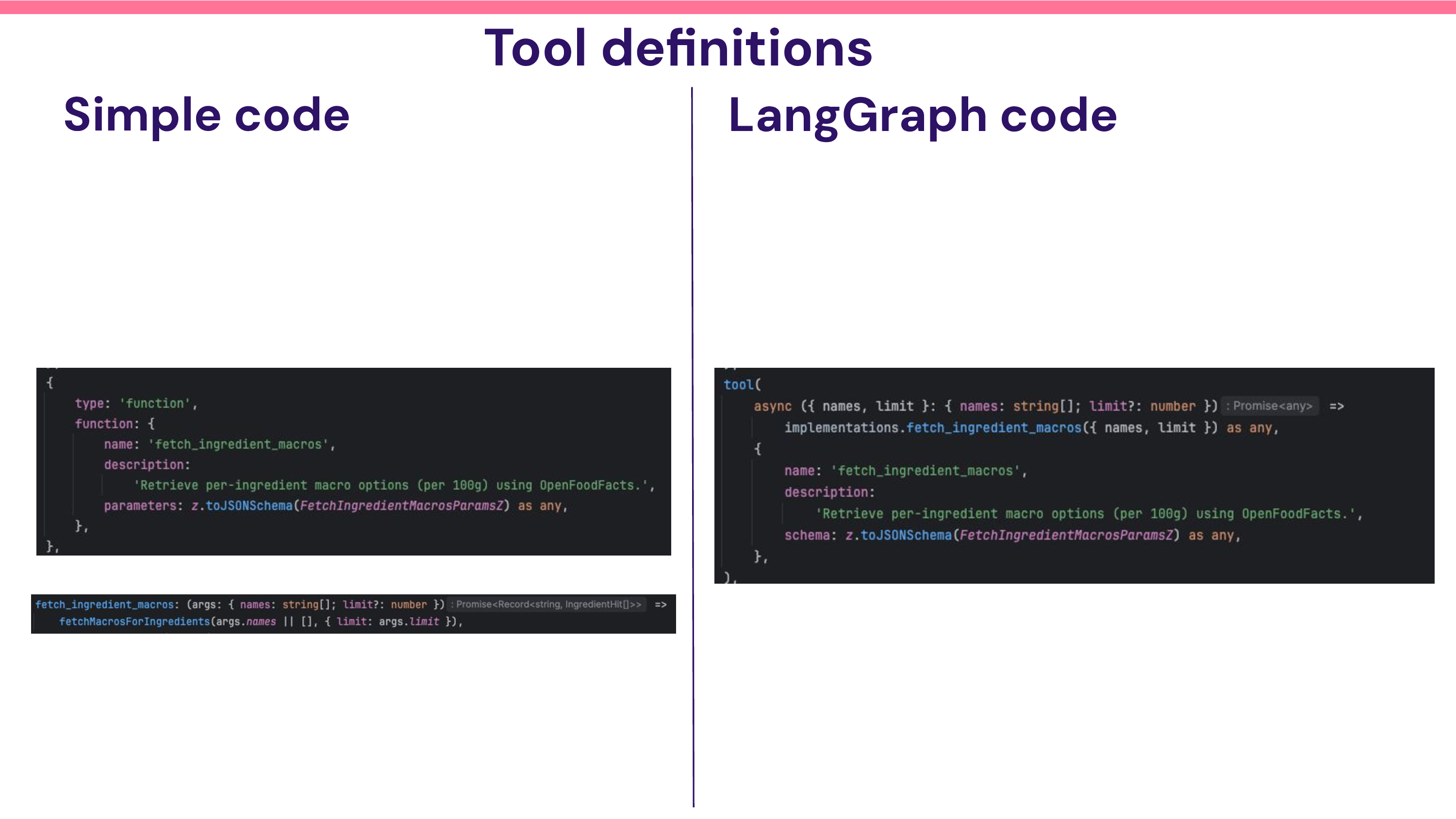

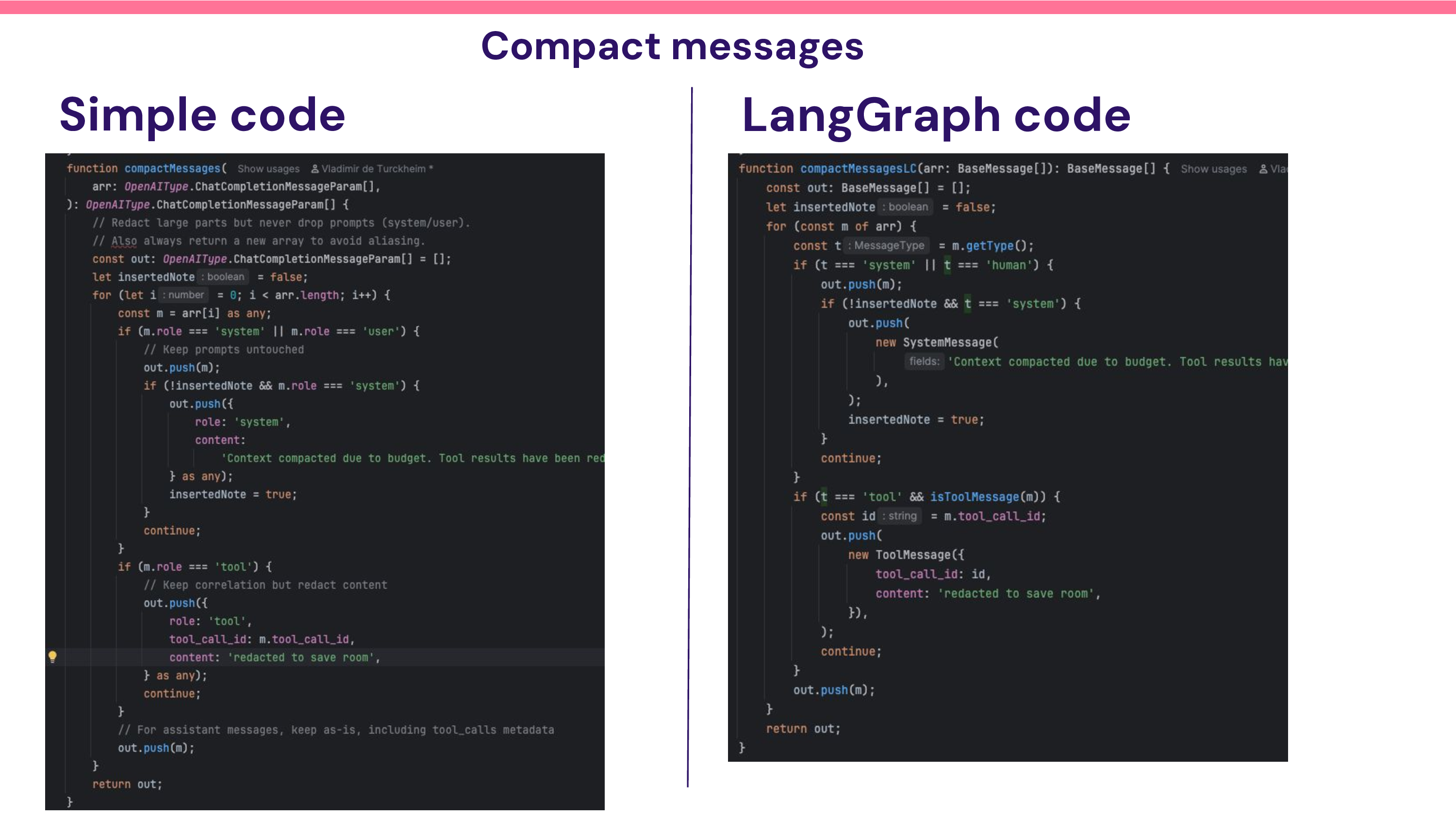

Side-by-side, the simple implementation is a handful of regular functions and a loop. The framework version is a state graph with nodes like call_model, tools, maybe_compact, and finalize, strung together with edges. Even if you like graphs, you're still just expressing the same loop in a more indirect way. I'd rather my teammates read and modify idiomatic code than memorize a second vocabulary.

Same story with tools. With native APIs, a tool is a typed function and a switch on the model's function call. In the framework, you write parallel declarations that mirror the same shape, plus glue to hand the result back. There's no functional gain — only another layer where things can go out of sync. When precision matters, fewer layers win.
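For illustration, here is roughly what "a typed function and a switch" can look like. The tool-call shape (a name plus a JSON string of arguments) is an assumption loosely modelled on OpenAI-style function calls, and both tools are stubs rather than real data sources.

```python
import json


def fetch_nutrition_facts(dish: str) -> dict:
    # Hypothetical tool, stubbed for the example.
    return {"dish": dish, "ingredients": ["cheese", "potato"]}


def fetch_review(dish: str) -> str:
    # Hypothetical tool, stubbed for the example.
    return f"Review for {dish}: molten and glorious."


def dispatch(tool_call: dict) -> str:
    """Run the tool the model asked for and return its result as a string.

    Assumed shape: {"name": str, "arguments": "<JSON object as a string>"}.
    """
    args = json.loads(tool_call["arguments"])
    name = tool_call["name"]
    if name == "fetch_nutrition_facts":
        return json.dumps(fetch_nutrition_facts(**args))
    if name == "fetch_review":
        return fetch_review(**args)
    # Unknown tool: report it back to the model instead of crashing the loop.
    return json.dumps({"error": f"unknown tool: {name}"})
```

The function signatures are the only tool declarations here, so there is no second copy to drift out of sync.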

Context compaction is often where "business rules" creep in. A generic "compact" node can't know what matters for your task; your code can. In the raclette agent, we might drop verbose review summaries but keep any nutrition facts that indicate unapproved greens. That's a local rule, and the safest way to encode it is right next to the loop, in code we own.
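A sketch of that local rule, with assumptions spelled out: messages are plain dicts with `role`, `name`, and `content`; the tool names match the utilities mentioned earlier; and the `GREENS` list is a made-up stand-in for whatever your checklist actually forbids. I also assume that nutrition facts with no greens in them can be dropped along with the reviews.

```python
# Hypothetical list of offending ingredients for this sketch.
GREENS = ("salad", "lettuce", "arugula", "spinach")


def mentions_greens(text: str) -> bool:
    lowered = text.lower()
    return any(green in lowered for green in GREENS)


def compact(messages: list[dict]) -> list[dict]:
    """Task-specific compaction for the raclette reviewer.

    - verbose review summaries are dropped
    - nutrition facts are kept only when they are evidence of greens
    - everything else (user input, other tool results) is kept as-is
    """
    kept = []
    for message in messages:
        is_tool = message["role"] == "tool"
        name = message.get("name")
        if is_tool and name == "fetch_review":
            continue  # reviews are long and never decide a criterion
        if is_tool and name == "fetch_nutrition_facts":
            if not mentions_greens(message["content"]):
                continue  # nothing incriminating, safe to drop
        kept.append(message)
    return kept
```

None of this is clever, which is the point: the rule is readable in one screen and sits next to the loop that depends on it.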

The common objections all have plain answers. Multi-agent orchestration? Functions calling functions is simply called programming. Memory in external stores? Use your usual DB/FS clients. Tracing? Hook the code you already run and ship spans/metrics to the observability stack you already pay for. Model management? Most providers speak the OpenAI-style API now; swap clients, not frameworks. RAG? Vector search doesn't require an agent framework to exist. The theme is consistent: let the building blocks be boring.

Conclusion: When do we need frameworks?

Frameworks shine when they standardize mature workflows and unlock ecosystems: think web frameworks after common patterns settled. We're not there with agents yet. Outside of conversational flows, we don't have stable categories of agent behavior with widely accepted recipes. If you standardize too early, you might freeze the wrong abstractions.

Today, most needs are covered by provider APIs, and the "graph tax" is real. The question isn't "is a framework cool?" It's "Is the complexity cost worth it right now for this team and this problem?" Start with code. If, later, the problem proves repeatable and you want to amortize those patterns across many engineers and services, revisit. Earn it with data, not vibes.

We're still early. That's exciting. The cost of exploration should be low, and the best way to keep it low is to use the simplest tool that works: loops, tools, a prompt, and discipline about measurement. If you're going to splurge, do it on the cheese, not the juicer.

Thanks again to everyone who joined the Konvupero kickoff. We'll keep it practical and keep it fun. If this resonates, come to the next Apero in Paris. We'll share the date soon, bring another conversation starter, and promise to keep the only thing between you and the charcuterie board as short as possible.

Oh, and if you’re interested in building framework-free AI agents to help with vulnerability management, we’re hiring.