The hamster wheel era of vulnerability management is ending

We mistook detection for progress

For the past decade, security has measured progress by how many vulnerabilities it could find.

More scanners, more alerts, more dashboards. Each promised clarity and delivered confusion.

That realization hit me hard after years at Datadog building cloud and application security products designed to detect vulnerabilities. CSPM, container image scanning, SAST, SCA, you name it.

One day, a leading online gaming company turned on the full suite. On our next call, their dashboard was on screen: 350,000 vulnerabilities blinking back at us. The security lead paused and said, "What the f*** am I supposed to do with this?" When enterprise customers start using the F-word, you know something's badly broken. That moment stuck. Detection wasn't progress, it was paralysis.

Every year, enterprises drown under ever-growing vulnerability backlogs. Security teams spend their days triaging issues they don't have the context to assess. Engineers receive floods of tickets for vulnerabilities that don't actually matter: over 85% are false positives. It's the security version of crying wolf. Eventually, no one comes running.

We built an industry obsessed with counting vulnerabilities instead of fixing risk. Detection created the illusion of control while pushing teams further behind.

More tools, more data, less clarity

Every new tool promised better security; what we got was more noise. Multiple scanners overlap across the same assets, each using different severity models. And unless you've spent nights reading the CVSS specification, CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H makes about as much sense as hieroglyphics.
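To make the point concrete, here is a minimal sketch of what decoding that string involves. The metric abbreviations and values are the CVSS v3.1 base metrics; the `decode_cvss` helper itself is a hypothetical name used only for illustration, and it does not compute a score.

```python
# Human-readable names for the CVSS v3.1 base metrics and their values.
METRICS = {
    "AV": ("Attack Vector", {"N": "Network", "A": "Adjacent", "L": "Local", "P": "Physical"}),
    "AC": ("Attack Complexity", {"L": "Low", "H": "High"}),
    "PR": ("Privileges Required", {"N": "None", "L": "Low", "H": "High"}),
    "UI": ("User Interaction", {"N": "None", "R": "Required"}),
    "S": ("Scope", {"U": "Unchanged", "C": "Changed"}),
    "C": ("Confidentiality", {"H": "High", "L": "Low", "N": "None"}),
    "I": ("Integrity", {"H": "High", "L": "Low", "N": "None"}),
    "A": ("Availability", {"H": "High", "L": "Low", "N": "None"}),
}


def decode_cvss(vector: str) -> dict[str, str]:
    """Expand a CVSS v3.x base vector into readable metric names and values."""
    prefix, *parts = vector.split("/")
    if not prefix.startswith("CVSS:3."):
        raise ValueError(f"unsupported vector: {vector!r}")
    decoded = {}
    for part in parts:
        key, value = part.split(":")
        name, values = METRICS[key]
        decoded[name] = values[value]
    return decoded


if __name__ == "__main__":
    vector = "CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"
    for name, value in decode_cvss(vector).items():
        print(f"{name}: {value}")
```

Even fully decoded, the vector only describes the vulnerability in the abstract. It says nothing about whether your environment meets those conditions.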

The more data we generated, the less understanding we had. And when the dashboards became overwhelming, we decided we had an aggregation problem. So we built new tools to aggregate all the old tools. Because clearly, if five dashboards full of problems weren't enough, one giant dashboard would do the trick. We didn't fix the noise, we just centralized it.

Every vulnerability requires hours of reasoning: reading advisories, checking references, mapping exploit conditions to your own code and infrastructure. Most of that work is manual and painfully repetitive. And yet it's where real security happens.

I've seen what happens when teams don't have the time or context to do that investigation well. In a recent conversation with a NASDAQ-listed company, their ops team had actually shut down a production EKS cluster after misinterpreting a vulnerability. Their security leader told me, "Every incident we've had in the last six months came from someone not understanding the vulnerability well and overreacting to what turned out to be a false positive."

AI just made the wheel spin faster

AI has supercharged both sides of the equation.

On one side, it's accelerating software creation: more code, more open source dependencies, more vulnerabilities. Models trained on public code inherit its flaws and replicate them at scale. AI is also helping scanners find even more issues, often business-logic flaws that traditional tools missed. In practice, though, that usually just adds more findings to already overloaded backlogs.

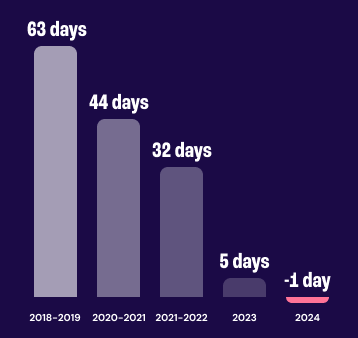

On the other side, attackers now use AI to weaponize new CVEs within days of publication. The time between disclosure and active exploitation is collapsing. Recent research shows the median time-to-exploit is now under -1 days, compared to 63 days just a few years ago.

The investigative work, the human reasoning required to decide whether a vulnerability actually matters, simply can't keep up.

As Verizon's Data Breach Investigations Report put it, we've entered "the vulnerability era."

We don't need more visibility. We need systems that can help solve the investigation bottleneck.

The next era of security: systems that reason, not just detect

Traditional tools detect and enumerate vulnerabilities. Konvu reasons about them.

It determines which vulnerabilities are exploitable and which aren't, providing evidence for both. This isn't about replacing scanners; it's about transforming what happens after detection.

Security teams can cut through the noise: less backlog, more focus, and triage decisions that come with proof. Not another scanner. Not a dashboard. A teammate that reasons.

Turning vulnerability data into exploitability intelligence

Every investigation starts with context around the vulnerability: what exactly would need to be true in the customer environment for this vulnerability to be exploitable? We don't rely on blind LLM prompting. Instead, Konvu builds and maintains a proprietary exploitability knowledge base, the result of careful research on each vulnerability. For every new vulnerability, we extract and codify intelligence from:

- advisory text and vendor notes, including ambiguous or low-quality descriptions;

- vulnerability references and proof-of-concept artifacts;

- dependency graphs;

- the specific commits that introduced or patched the vulnerable code.

That raw intelligence is normalized into structured exploitability conditions (the "if-then" checklist a security engineer would write). Crucially, every entry in this knowledge base is produced by an AI+human loop: automated extraction proposes candidate conditions, and experienced analysts vet, correct, and annotate them. Over time that human-vetted corpus becomes a high-fidelity source of truth for what actually makes a vulnerability exploitable.
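As a rough illustration only, a knowledge base entry of this kind could be modeled as below. This is not Konvu's actual schema: every class, field, and status name here is hypothetical, and the CVE identifier is a placeholder.

```python
from dataclasses import dataclass, field
from enum import Enum


class ReviewStatus(Enum):
    PROPOSED = "proposed"  # suggested by automated extraction
    VETTED = "vetted"      # confirmed or corrected by an analyst


@dataclass
class Condition:
    """One 'if' in the checklist: a fact that must hold for exploitation."""
    id: str
    description: str
    source: str  # hypothetical labels, e.g. "advisory", "poc", "patch-commit"
    status: ReviewStatus = ReviewStatus.PROPOSED
    analyst_note: str = ""


@dataclass
class KnowledgeBaseEntry:
    vuln_id: str
    conditions: list[Condition] = field(default_factory=list)

    def vet(self, condition_id: str, note: str = "") -> None:
        """Record that an analyst reviewed and accepted a condition."""
        for cond in self.conditions:
            if cond.id == condition_id:
                cond.status = ReviewStatus.VETTED
                cond.analyst_note = note
                return
        raise KeyError(condition_id)

    def vetted_conditions(self) -> list[Condition]:
        """Return only the conditions a human has signed off on."""
        return [c for c in self.conditions if c.status is ReviewStatus.VETTED]


entry = KnowledgeBaseEntry(
    vuln_id="CVE-0000-00000",  # placeholder identifier, not a real CVE
    conditions=[
        Condition("c1", "Vulnerable function is reachable from application code", "patch-commit"),
        Condition("c2", "Attacker-controlled input reaches the vulnerable sink", "poc"),
    ],
)
entry.vet("c1", note="Confirmed against the patch diff")
print([c.id for c in entry.vetted_conditions()])
```

Keeping the review status on each condition, rather than on the entry as a whole, lets proposed and vetted conditions coexist while analysts work through them.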

Reasoning about exploitability within enterprise context

Konvu's agentic system uses that knowledge base as its reasoning substrate. Agents autonomously orchestrate targeted checks against the customer's environment (code paths, configurations, dependency versions, runtime indicators, and cloud or organizational metadata) to test whether those exploitability conditions are actually met.

This is where context changes everything. A CVE exploitable in one service might be harmless in another. A CVE marked "critical" can be irrelevant if the exploit depends on a specific OS version that you don't run, or if the risky code path is gated behind a library option that's never enabled in production. Conversely, a "medium" finding can become exploitable when an attacker-controlled input — for example an unvalidated request parameter — reaches the vulnerable sink.

Konvu reasons through these relationships automatically, drawing from the same cues a security engineer would, but at scale and with reproducible logic.

Each conclusion is backed by concrete evidence: which exploit conditions were met, which weren't, and what data would be needed to confirm the remainder.

The result is not a fuzzy score, but a reproducible, evidence-backed judgment: exploitable, false positive, or inconclusive.
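One way to picture the three-way outcome is a small sketch like the following. It assumes a simple rule that is our illustration rather than a description of Konvu's engine: every condition must hold for a vulnerability to be exploitable, one refuted condition is enough to dismiss it, and anything else is inconclusive. The names `Finding`, `judge`, and `report` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Finding:
    """Result of checking one exploitability condition in an environment."""
    condition: str
    met: Optional[bool]  # True or False, or None when the data is missing
    evidence: str


def judge(findings: list[Finding]) -> str:
    """Assumed rule: all conditions must hold for the issue to be exploitable."""
    if not findings:
        return "inconclusive"    # nothing was checked, so nothing is proven
    if any(f.met is False for f in findings):
        return "false positive"  # one refuted condition breaks the chain
    if all(f.met is True for f in findings):
        return "exploitable"
    return "inconclusive"        # nothing refuted, but something is unknown


def report(findings: list[Finding]) -> dict:
    """Bundle the verdict with the evidence behind it."""
    return {
        "verdict": judge(findings),
        "met": [f.evidence for f in findings if f.met is True],
        "not_met": [f.evidence for f in findings if f.met is False],
        "needs_data": [f.condition for f in findings if f.met is None],
    }


findings = [
    Finding("vulnerable version installed", True, "lockfile pins the affected release"),
    Finding("risky library option enabled", False, "option is disabled in the production config"),
    Finding("input is attacker-controlled", None, "no request trace available"),
]
print(report(findings)["verdict"])  # prints "false positive"
```

Because the verdict is a pure function of the recorded findings, rerunning it on the same evidence yields the same answer, which is what makes the judgment reproducible rather than a score.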

Every decision is traceable, auditable, and as rigorous as a human analyst. Only faster, and always on.

Accuracy and trust are the real challenges

Demos are easy. Making an agent reason reliably across messy, real-world enterprise environments is the hard part.

Getting there requires three pillars of trust:

- Accuracy: combining deterministic tools (dependency graphs, runtime sensors) with adaptive reasoning. The system must replicate how a human would weigh evidence, not hallucinate or guess.

- Explainability: backing every decision with proof of which exploitability conditions were met, which weren't, and why. Every reasoning step and data source is logged, so users can inspect, reproduce, and challenge the result.

- Autonomy: as confidence builds, agents progress from assisted triage to safe auto-dismissal and, eventually, remediation. But always with verifiable proof behind each action.

Trust isn't just a feature; it's the foundation. When security teams can see why an agent reached its conclusion, they start to rely on it. That trust is what unlocks the next generation of autonomous security software, software that doesn't just detect or decide, but acts with confidence and accountability.

We're building the teammate every security team has always needed

Vulnerability management doesn't need more dashboards, it needs more brain power. After years of detection-first tools, it's time to make security products intelligent and autonomous. That's what we're building at Konvu: AI agents that think like security engineers, investigate every vulnerability, explain why it matters or why it doesn't, and then remediate the ones that matter.

If you're a security leader buried under backlogs, or an engineer who wants to help shape the next generation of intelligent security software, we'd love to talk.

The next wave of security software won't just see vulnerabilities. It will understand them, and autonomously act with confidence.